Discovery of a New Particle at 125 GeV: Physics, Technology and Cyberinfrastructure

Harvey B Newman [*]

High energy physics experiments at the Large Hadron Collider (LHC) have begun to explore the fundamental properties of the forces and symmetries of nature, and the particles that compose the universe in a new energy range. In operation since 2009, the LHC experiments have distributed hundreds of petabytes of data worldwide. Many thousands of physicists analyze tens of millions of collisions daily, leading to weekly publications of new results in peer-reviewed journals.

The complexity and scope of the experimental detector facilities, the data acquisition, computing and software systems are all unparalleled in the scientific community. Many petascale data samples of events are extracted daily from hundreds of trillions of proton-proton collisions and are explored by thousands of physicists and students searching for new physics signals. They are located at hundreds of sites interlinked by high speed networks around the world, and are supported by many hundreds of computer scientists and engineers. In spite of these challenges, the two largest experiments, CMS and ATLAS, have optimized their analyses in many channels and produced groundbreaking results with a speed unprecedented in the field. The other major experiments at the LHC, ALICE and LHCb, have also made great strides and produced a vast array of new results in heavy ion and flavor physics, respectively.

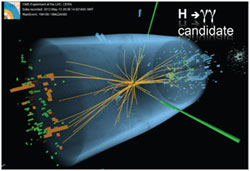

Figure 1: A candidate event for the decay of a Higgs boson to two photons, one of the distinctive modes used for the discovery.

A major milestone on July 4, 2012 was the simultaneous discovery by ATLAS and CMS of the "Higgs" or a "Higgs-like" boson, culminating the 40+ year search for such a particle thought to be responsible for the masses of elementary particles. A typical candidate event in the two photon decay channel, illustrated in Figure 1, is one of several decay modes analyzed for this discovery. One of the next major steps along this generation-long program of exploration is the determination of whether the new particle is the scalar (spin zero, positive parity) Higgs boson of the Standard Model (SM) of particle physics, or an "impostor" with somewhat different properties (spin and other quantum numbers, or its production and decay rates), opening a new era of physics beyond the Standard Model. It is also possible that this is the SM Higgs, but that its production is modified by the existence of undiscovered heavy new particles that contribute to the gluon-fusion loop diagram that is the dominant production mode.

Irrespective of the discoveries to come, perhaps as early as this year as the experiments expect to triple their collected data, physicists already know that the SM is incomplete. It cannot explain the nature of dark matter, for example, nor can it hold at the energies that prevailed in the early universe. The leading candidate alternative theory is Supersymmetry (SUSY), but direct searches have already ruled out much if not most of the SUSY parameter space accessible with 8 TeV collisions, for the simplest SUSY models. And a "Higgs" of mass 125 GeV is difficult to produce in such models. It is nearly "too heavy" to be accommodated, and so particular models with relatively light third-generation SUSY particles, or other models with multiple Higgs particle types or new yet-undiscovered scalar or vector (spin 1) particles have been proposed.

For the next decades, CMS and ATLAS and the other LHC experiments will therefore continue to advance their search for a wide variety of new phenomena that could point the way to that more fundamental theory. The higher energies available when the LHC moves to 13-14 TeV in 2015 and onward will bring greater physics reach, but there are many other keys to the search and future discoveries. These include more sophisticated algorithms to cope with the increasingly severe conditions of "pileup" from multiple interactions in a single crossing of proton bunches in the LHC collider, and with the increasing radiation exposure of the detector elements as the LHC luminosity and energy increase. They also include the means to cope with ever larger data volumes and more massive computing and networking needs.

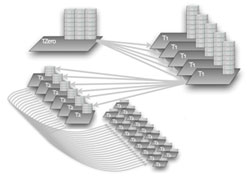

Figure 2: Traditional hierarchical model of the first generation WLCG as the LHC went into operation. Computing resources and data channels follow a tiered organization expressed in a grid paradigm, with strict service roles provided at and between each level of the hierarchy. This model has recently given way to a more agile data access and transport model with a richer set of wide area connections among sites in different layers and in different world regions.

Until now the computing challenges have been met successfully, after years of preparation and field-testing, by the Worldwide LHC Computing Grid (WLCG), which provides access to the Open Science Grid (OSG), EGI and NorduGrid; the LHC experiments have seamlessly combined the resources of more than a hundred computing centers around the globe. On average, a few hundred thousand jobs run simultaneously, accessing hundreds of petabytes of deployed storage worldwide and utilizing hundreds of gigabits/sec of network bandwidth using a hierarchical grid model depicted in Figure 2. Scientists access these computing resources transparently through distributed computing systems developed by their collaborations. These distributed software systems are highly flexible and are evolving to meet the needs of thousands of physics users, in many cases by investing them with increasing degrees of intelligence and an increased level of communications performance.

Even though the distributed computing model for the LHC experiments has proven to be extraordinarily successful, we anticipate that the software stacks will need to be replaced within the next few years. The current system will not scale to the complexity and challenges of exabyte-scale data. Human resources needed to operate the hardware and software infrastructure cannot be linearly scaled tenfold along with the data. There are still many barriers to efficient data intensive scientific computing that need to be overcome. The revolutionary changes for the new distributed computing model need to start immediately, to be ready for LHC data-taking at the design energy in 2015 and beyond.

Sophisticated and robust statistical methods have been developed and used to tease out and fairly gauge the appearance of significant new physics signals from large and potentially overwhelming backgrounds. These so-far-successful methods will not scale as needed in the latter part of this decade, particularly if and when the first experimental hints of a more fundamental theory of nature break. If signs of a new theory do emerge, then rapidly finding and characterizing it, in a vast space of candidate models with millions of possible decay chains and billions of possible parameter sets, each to be compared in many dimensions to massive volumes of data, will require new artificially intelligent methods and new orders of magnitude of computational power. The output of this program will be a new set of predictions to be tested through a new multiyear round of targeted analyses, in order to pin down the new theory.

Along the way, CMS and ATLAS and the other detectors will undergo major upgrades, which, together with larger data volumes and higher collision energies, will provide greater reach in the search for new physics as well as fertile ground to train the next generation of scientists and engineers skilled in one or many disciplines: in new methods of signal extraction and background rejection; in new methods of hypothesis testing and model selection (finding that more fundamental theory in a vast theory and parameter space); in learning to create, operate and harness a new level and scale of grid-based systems and the dynamic networks, monitoring systems and control planes that underpin them; and in developing a new generation of collaboration systems and learning how to use them effectively for cooperative daily work on multiple scales, from small groups working round the clock to daily working meetings to weekly collaboration meetings involving hundreds of sites.

[*] I have been privileged to contribute in many ways to the LHC program and its successes, including the recent "discovery milestone". Our group in CMS works on Higgs searches, the search for Supersymmetry and many other forms of exotic new physics, as well as the calorimeters that precisely measure electrons, photons, jets and missing energy transverse to the beam line, and the trigger that makes online decisions in real time on which events to keep. Beyond that, our Caltech team has created or made central contributions to major elements of "cyberinfrastructure" including: the initiation of international networking for HEP from 1982 on, and US LHCNet since 1995; the LHC worldwide Computing Model from 1998; the EVO (Enabling Virtual Organizations) global collaboration system since 1994; the MonALISA monitoring and control system since 2000; and the NSF-funded DYNES dynamic circuit network project being deployed at 50 campuses, as well as the LHC Open Network Environment (LHCONE) program since 2010.

Harvey Newman (newman@hep.caltech.edu) is a Professor at Caltech, a high-energy physics experimentalist and past Chair of the FIP. He is also engaged in work on Digital Divide issues in many regions of the world.

Disclaimer - The articles and opinion pieces found in this issue of the APS Forum on International Physics Newsletter are not peer refereed and represent solely the views of the authors and not necessarily the views of the APS.